These are summary notes from studying for the CKA.

Node name

- default:

kube-scheduler - by default, this component assigns pods to nodes

Check status

kubectl get node (node = no)

    > k get no
    NAME           STATUS   ROLES                  AGE     VERSION
    controlplane   Ready    control-plane,master   11m     v1.20.0
    node01         Ready    <none>                 9m48s   v1.20.0

Manual assignment

- To schedule a pod on a node manually

nodeName

    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
      nodeName: node01

Label & Selectors

Label

definition

    apiVersion: ~~
    kind: ~~
    metadata:
      name: ~~
      labels:
        app: App1
        function: Front-end
    spec: ~~

→ labels are set under metadata: labels

imperative

    kubectl label node node01 key=value

Select

    kubectl get pods --selector app=App1

- several selector fields

    kubectl get pods --selector app=App1,env=dev

ReplicaSet

replica-definition.yaml

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: simple-webapp
      labels:
        app: App1
        function: Front-end
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: App1
      template:
        metadata:
          labels:
            app: App1
            function: Front-end
        spec:
          containers:
          - name: simple-webapp
            image: simple-webapp

- labels at the top are the labels of the ReplicaSet itself.

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: simple-webapp
      labels:
        app: App1
        function: Front-end

- in order to connect the ReplicaSet to the pods, configure the selector field so that it matches the labels in the pod template

    selector:
      matchLabels:
        app: App1

Annotations

- used to record other details for informational purposes

- e.g. tool details (name, version, build information)
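As a minimal sketch of the point above, annotations sit next to labels under metadata. The annotation key `buildversion` and its value are illustrative assumptions, not taken from these notes.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: simple-webapp
  labels:
    app: App1
  annotations:
    # hypothetical key/value, for illustration only;
    # annotation values must be strings, hence the quotes
    buildversion: "1.34"
spec:
  replicas: 3
  selector:
    matchLabels:
      app: App1
  template:
    metadata:
      labels:
        app: App1
    spec:
      containers:
      - name: simple-webapp
        image: simple-webapp
```

Unlike labels, annotations are not used by selectors, so adding them does not change which pods the ReplicaSet manages.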

Taints, Tolerations

- taints

- set on the nodes

- tolerations

- set on the pods

- taints and tolerations do not tell a pod to go to a particular node

- they only tell a node to accept pods with certain tolerations

Taints

Set

    kubectl taint nodes node-name key=value:taint-effect

taint-effect:

NoSchedule

- pods will not be scheduled on the node if they do not tolerate the taint

PreferNoSchedule

- the system will try to avoid placing a pod on the node, but this is not guaranteed

NoExecute

- new pods will not be scheduled on the node

- existing pods on the node will be evicted if they do not tolerate the taint

Check

- describe & grep

    k describe node node01 | grep -i taints
    Taints:             <none>

Remove

- append a trailing `-` to the same taint specification

    kubectl taint nodes node-name key=value:taint-effect-

    kubectl taint nodes master/controlplane node-role.kubernetes.io/master:NoSchedule-

Tolerations

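Configuration

Tolerations are declared on the pod, under spec.tolerations; each toleration names the key, operator, value, and effect of the taint it tolerates.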
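A minimal sketch of a pod definition with a toleration follows. It assumes the node was tainted with `app=blue:NoSchedule`; that key and value are hypothetical and only serve the example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # assumes a taint created with:
  #   kubectl taint nodes node01 app=blue:NoSchedule   (hypothetical taint)
  - key: "app"
    operator: "Equal"
    value: "blue"
    effect: "NoSchedule"
```

The toleration only allows the pod onto the tainted node; the scheduler may still place it on any other untainted node.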

PODs to Node

- Node Selector

- Node Affinity

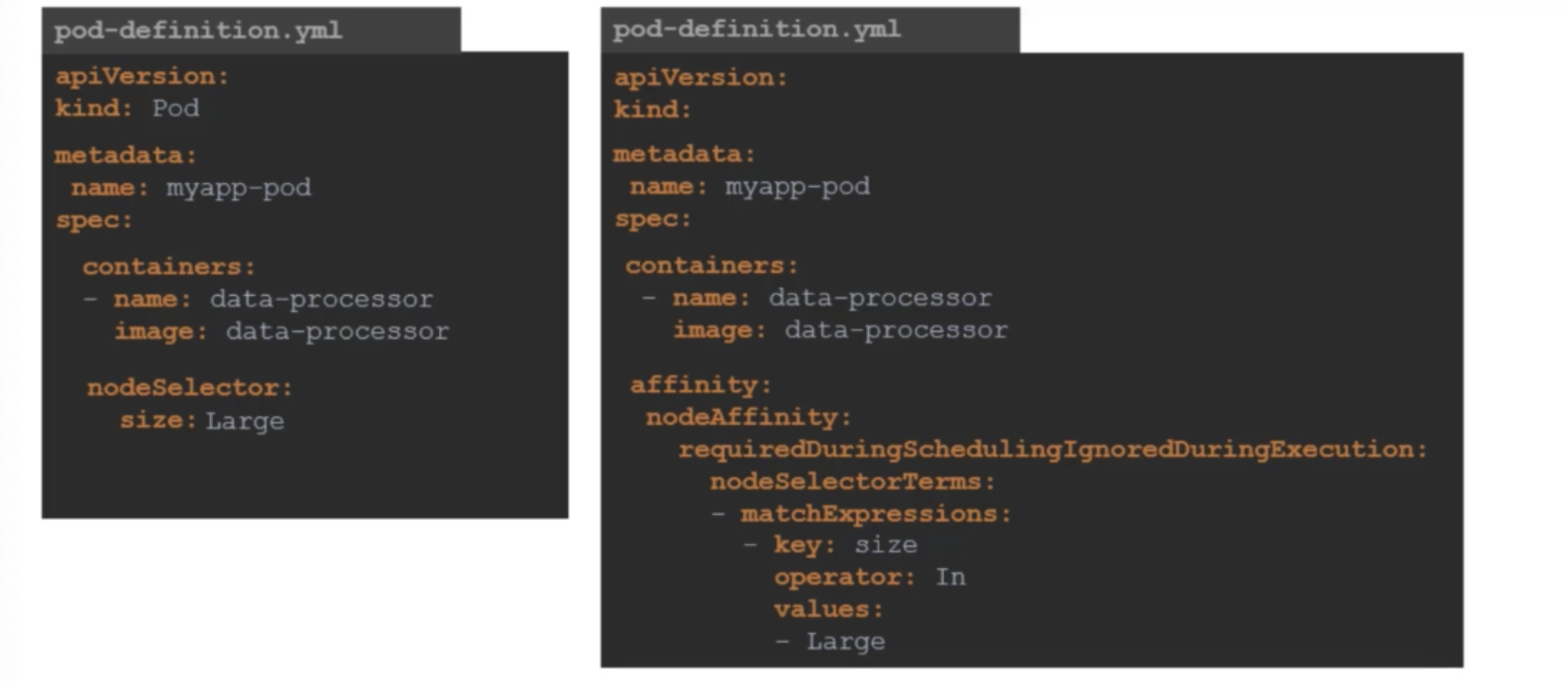

Node Selector

Label nodes

use nodeSelector with the label

    apiVersion:
    kind:
    metadata:
      name:
    spec:
      containers:
      - name:
        image:
      nodeSelector:
        size: Large

limitations

- only exact-match labels are supported (multiple labels are ANDed)

- more complex constraints (e.g. Large OR Medium, NOT Small) cannot be expressed

Node Affinity

nodeSelector vs affinity

- both can achieve the same result, e.g. scheduling pods onto the Large node

Configuration

Node affinity is declared on the pod, under spec.affinity.nodeAffinity.
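As a sketch, the nodeSelector example (`size: Large`) could be expressed with node affinity roughly as follows. The pod and image names are placeholders reused from the earlier examples.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          # schedule only on nodes labelled size=Large
          - key: size
            operator: In
            values:
            - Large
```

Adding `Medium` to `values` would express the "Large OR Medium" constraint that nodeSelector cannot.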

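operator

In

- the pod is scheduled only on nodes whose label value is one of the listed values

NotIn

- the pod is not scheduled on nodes whose label value is one of the listed values

Exists

- only checks whether the given key exists on the node; no values are needed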

Node Affinity Types

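- available

requiredDuringSchedulingIgnoredDuringExecution

preferredDuringSchedulingIgnoredDuringExecution

- planned

requiredDuringSchedulingRequiredDuringExecution

- two states in the life cycle of a pod

DuringScheduling

Required - the pod must be placed on a matching node; if none exists, it is not scheduled

Preferred - a matching node is preferred; if none exists, the pod is scheduled on any available node

DuringExecution

Ignored - pods that are already scheduled are not affected by later label changes

Required - the pod will be evicted if the node no longer matches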
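To illustrate the Preferred variant, here is a hedged sketch of the same `size` constraint written as a preference. The weight value is an arbitrary assumption; any integer from 1 to 100 is allowed.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      # weight (1-100) ranks this preference against others; 1 is arbitrary here
      - weight: 1
        preference:
          matchExpressions:
          - key: size
            operator: In
            values:
            - Large
```

Note the different shape: the preferred form takes a list of weighted `preference` entries, while the required form takes `nodeSelectorTerms`.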

Resource Limits

Resource Requests

Configuration

Requests are set per container, under resources.requests.
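A minimal sketch of a pod with resource requests; the amounts are illustrative assumptions, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      requests:
        cpu: 100m        # same as 0.1 CPU
        memory: 256Mi    # illustrative value
```

The scheduler uses these values to pick a node with enough unreserved capacity.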

- cpu:

- 0.1 == 100m

- lower bound: 1m

- memory: specified in bytes, usually with a suffix such as Mi or Gi

Resource Limits

Configuration

Limits are set per container, under resources.limits.
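A sketch of a container that declares both requests and limits; all amounts are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      requests:
        cpu: 100m
        memory: 256Mi
      limits:
        cpu: "1"         # CPU use above this is throttled
        memory: 512Mi    # exceeding this can get the container OOM-killed
```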

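- default (applies only when a LimitRange in the namespace defines it; otherwise containers have no default limit)

- 1 vCPU

- 512Mi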
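As a sketch, default limits like the ones listed above could be provided by a LimitRange in the namespace. The object name is hypothetical.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: default-limits   # hypothetical name
spec:
  limits:
  - type: Container
    # applied as the limit to containers that do not declare their own
    default:
      cpu: "1"
      memory: 512Mi
```

A LimitRange only affects pods created after it exists; running pods keep whatever they were created with.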

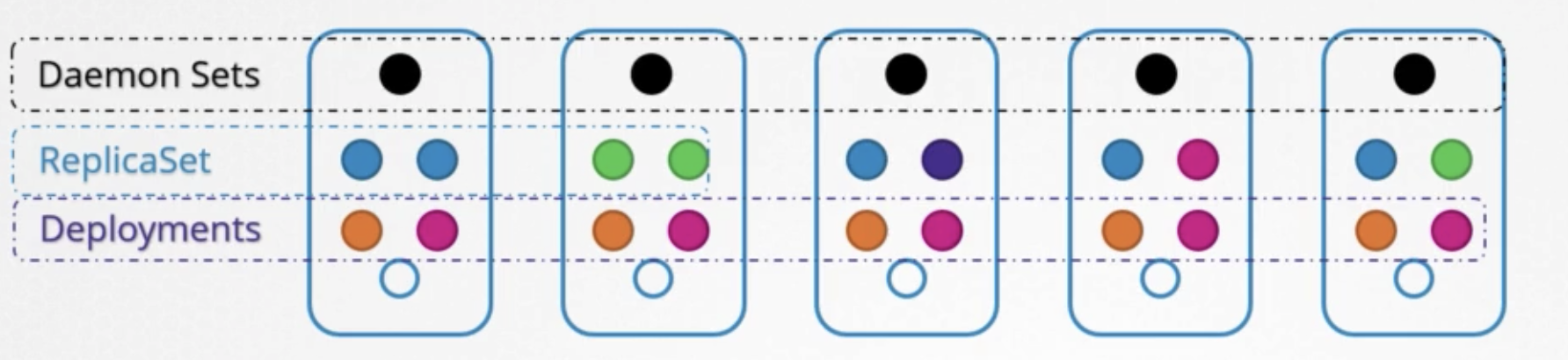

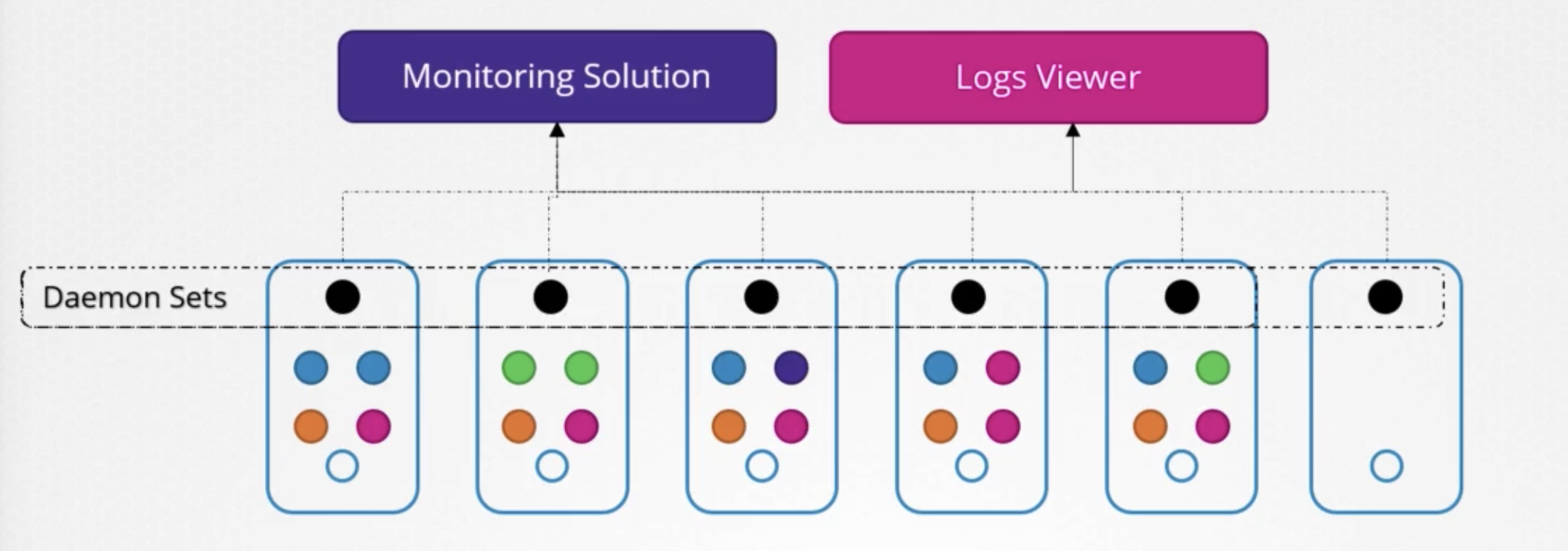

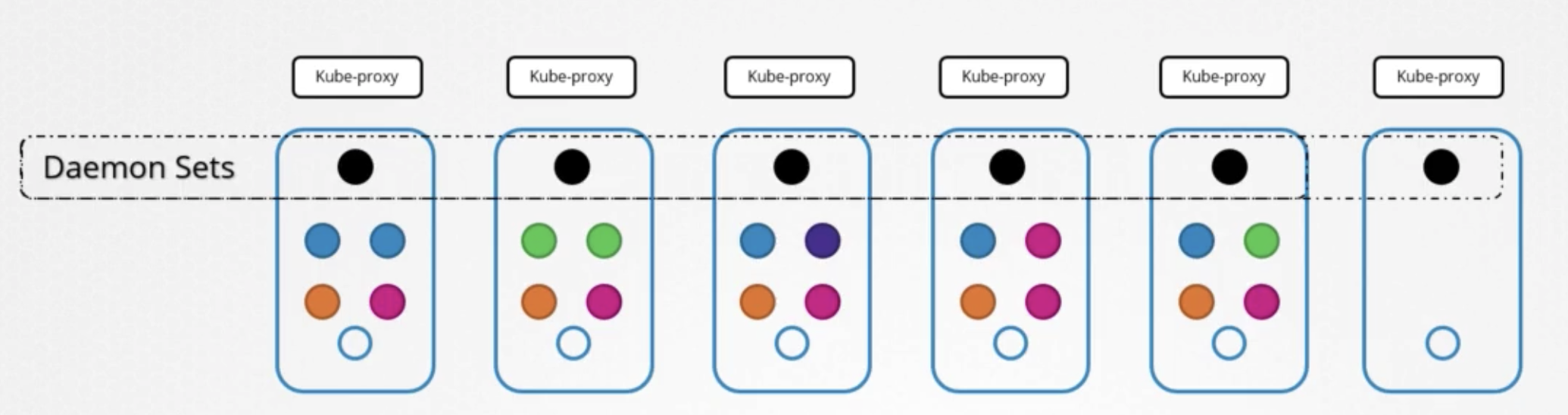

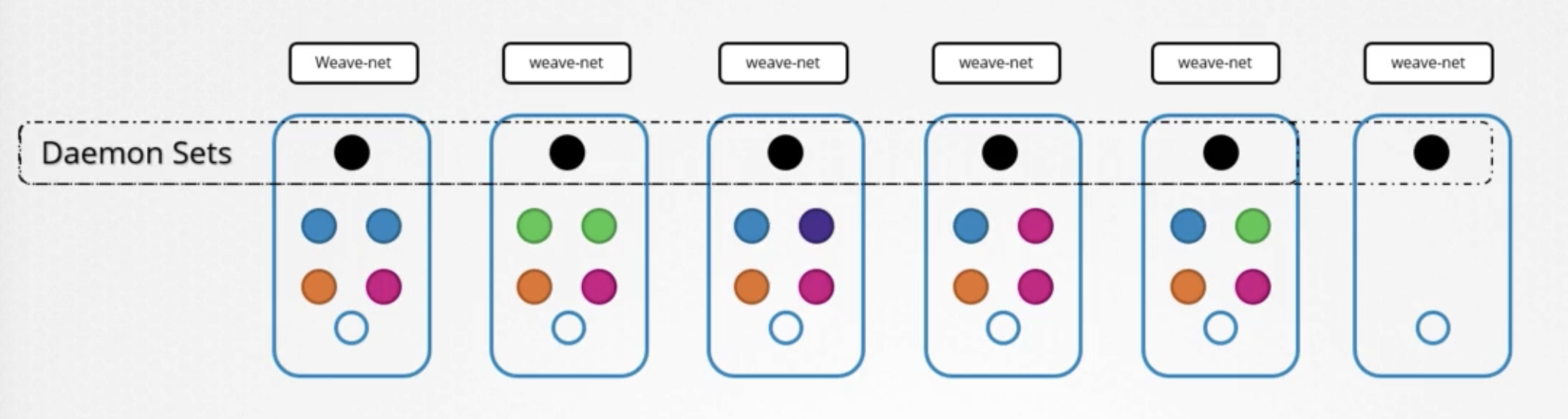

Daemon Sets

- one copy of the pod is always present on every node in the cluster

use case

- monitoring

- kube-proxy

- networking

Create

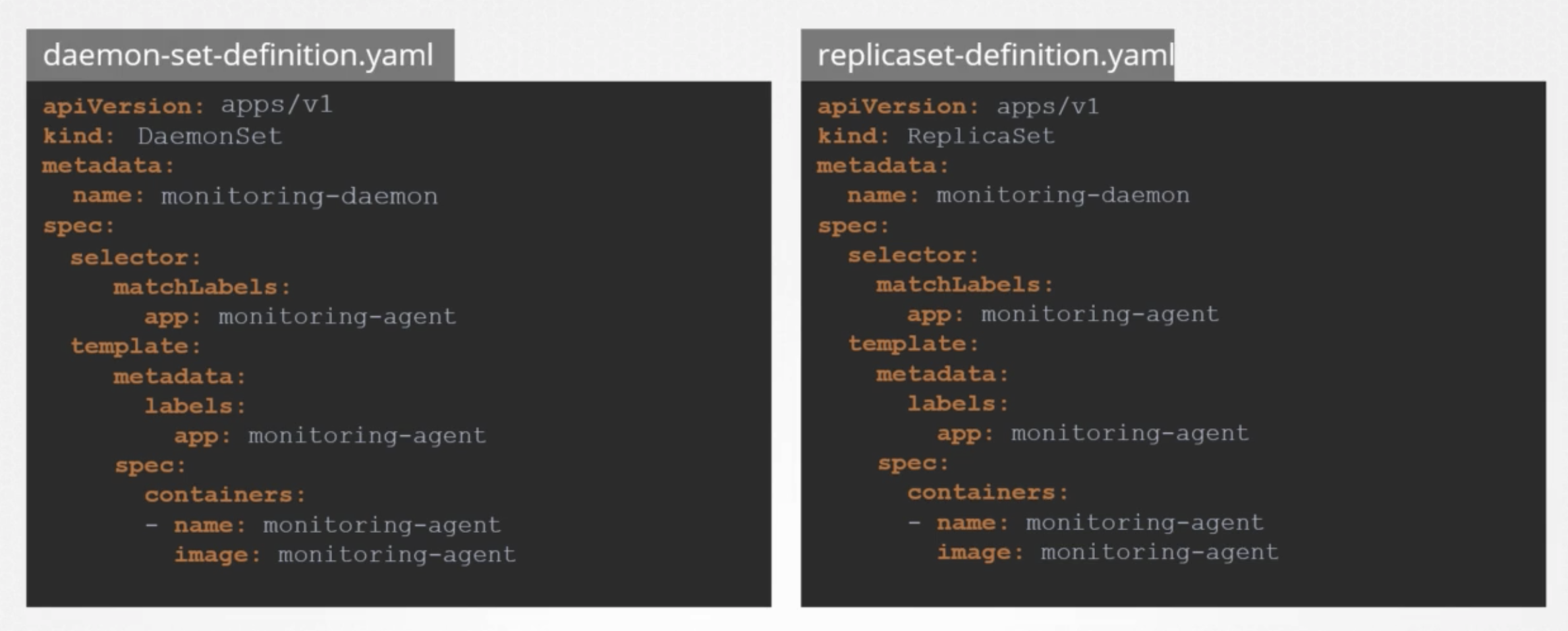

DaemonSet vs ReplicaSet

definition

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: monitoring-daemon
    spec:
      selector:
        matchLabels:
          app: monitoring-daemon
      template:
        metadata:
          labels:
            app: monitoring-daemon
        spec:
          containers:
          - name: monitoring-agent
            image: monitoring-agent

Status

    kubectl get daemonsets

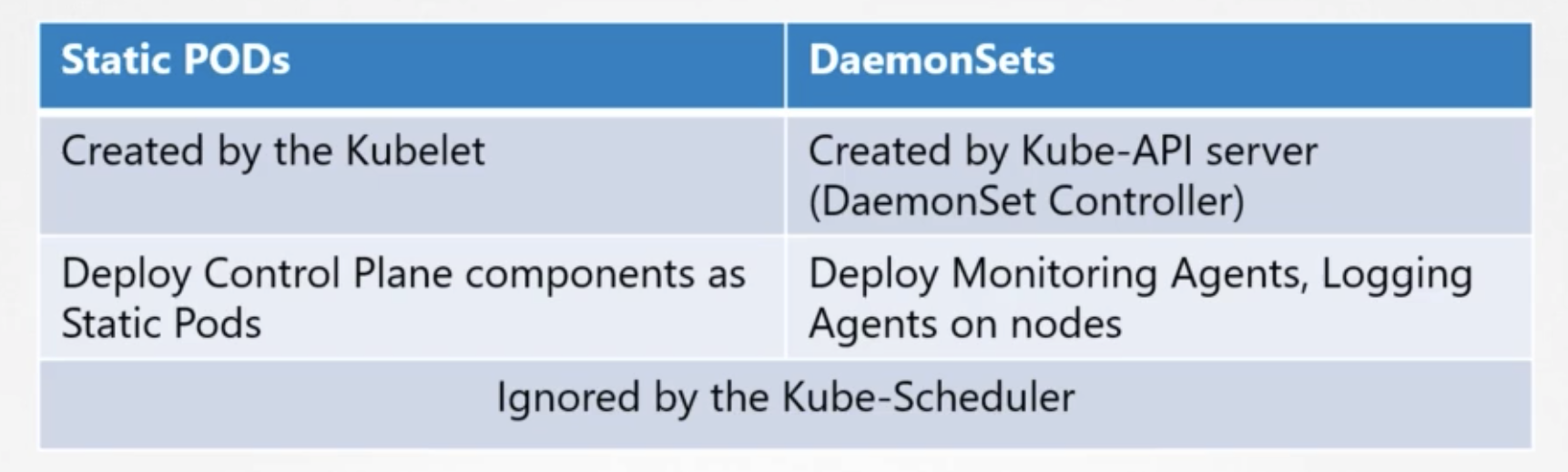

Static PODs

Static PODs vs DaemonSets

- static pod

- created directly by kubelet

Config

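Either pass the directory to the kubelet directly:

    --pod-manifest-path=/etc/kubernetes/manifests

or point the kubelet at a config file that sets staticPodPath:

    --config=kubeconfig.yaml

    # kubeconfig.yaml
    staticPodPath: /etc/kubernetes/manifests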
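For illustration, a static pod is just an ordinary pod manifest saved into the directory configured above; the kubelet picks it up and creates the pod on its own. The file name and pod name below are hypothetical.

```yaml
# saved as static-web.yaml in the static pod directory (hypothetical file name)
apiVersion: v1
kind: Pod
metadata:
  name: static-web       # hypothetical pod name
spec:
  containers:
  - name: web
    image: nginx
```

The kubelet appends the node name to the pod name, so on a node called node01 this pod would be listed as static-web-node01.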

File location

First identify the kubelet config file, e.g. by checking the --config flag of the running kubelet process (`ps -aux | grep kubelet`):

From the output we can see that the kubelet config file used is /var/lib/kubelet/config.yaml

Next, look up the value assigned to staticPodPath (e.g. `grep -i staticPodPath /var/lib/kubelet/config.yaml`):

As you can see, the path configured is the /etc/kubernetes/manifests directory.

Status

    kubectl get po -A

- static pods have -controlplane (the node name) appended to their names

    > k get po -A
    NAMESPACE     NAME                                   READY   STATUS    RESTARTS   AGE
    kube-system   coredns-74ff55c5b-578df                1/1     Running   0          25m
    kube-system   coredns-74ff55c5b-nnjdp                1/1     Running   0          25m
    kube-system   etcd-controlplane                      1/1     Running   0          25m
    kube-system   kube-apiserver-controlplane            1/1     Running   0          25m
    kube-system   kube-controller-manager-controlplane   1/1     Running   0          25m
    kube-system   kube-flannel-ds-q7plw                  1/1     Running   0          24m
    kube-system   kube-flannel-ds-w5rnm                  1/1     Running   0          25m
    kube-system   kube-proxy-5dg8f                       1/1     Running   0          24m
    kube-system   kube-proxy-ld2qq                       1/1     Running   0          25m
    kube-system   kube-scheduler-controlplane            1/1     Running   0          25m

grep -i controlplane

    > k get po -A | grep -i controlplane
    kube-system   etcd-controlplane                      1/1     Running   0          27m
    kube-system   kube-apiserver-controlplane            1/1     Running   0          27m
    kube-system   kube-controller-manager-controlplane   1/1     Running   0          27m
    kube-system   kube-scheduler-controlplane            1/1     Running   0          27m

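Delete

First, let’s identify the node on which the pod called static-greenbox was created. To do this, list the pods together with their nodes, e.g. `kubectl get pods --all-namespaces -o wide | grep static-greenbox`.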

From the result of this command, we can see that the pod is running on node01.

Next, SSH to node01 and identify the path configured for static pods in this node.

Important: The path need not be /etc/kubernetes/manifests.

Make sure to check the path configured in the kubelet configuration file.

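For example, run `ssh node01` and then `grep -i staticPodPath /var/lib/kubelet/config.yaml`, assuming the kubelet config file is at the same location on this node.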

Here the staticPodPath is /etc/just-to-mess-with-you

Navigate to this directory (`cd /etc/just-to-mess-with-you`) and delete the pod's YAML manifest file with `rm`.

Exit out of node01 using CTRL + D or type exit. You should return to the controlplane node. Check whether the static-greenbox pod has been deleted, e.g. with `kubectl get pods --all-namespaces | grep static-greenbox`.

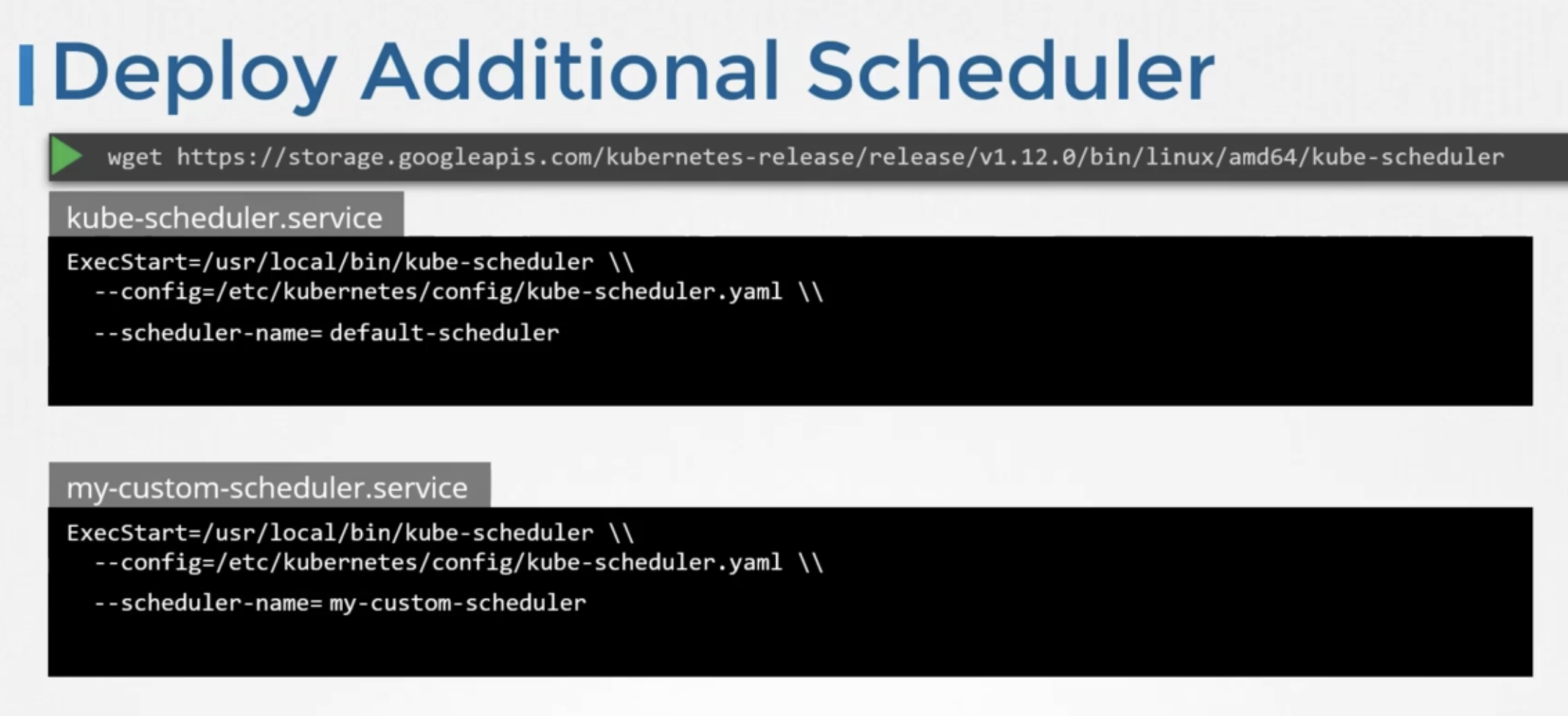

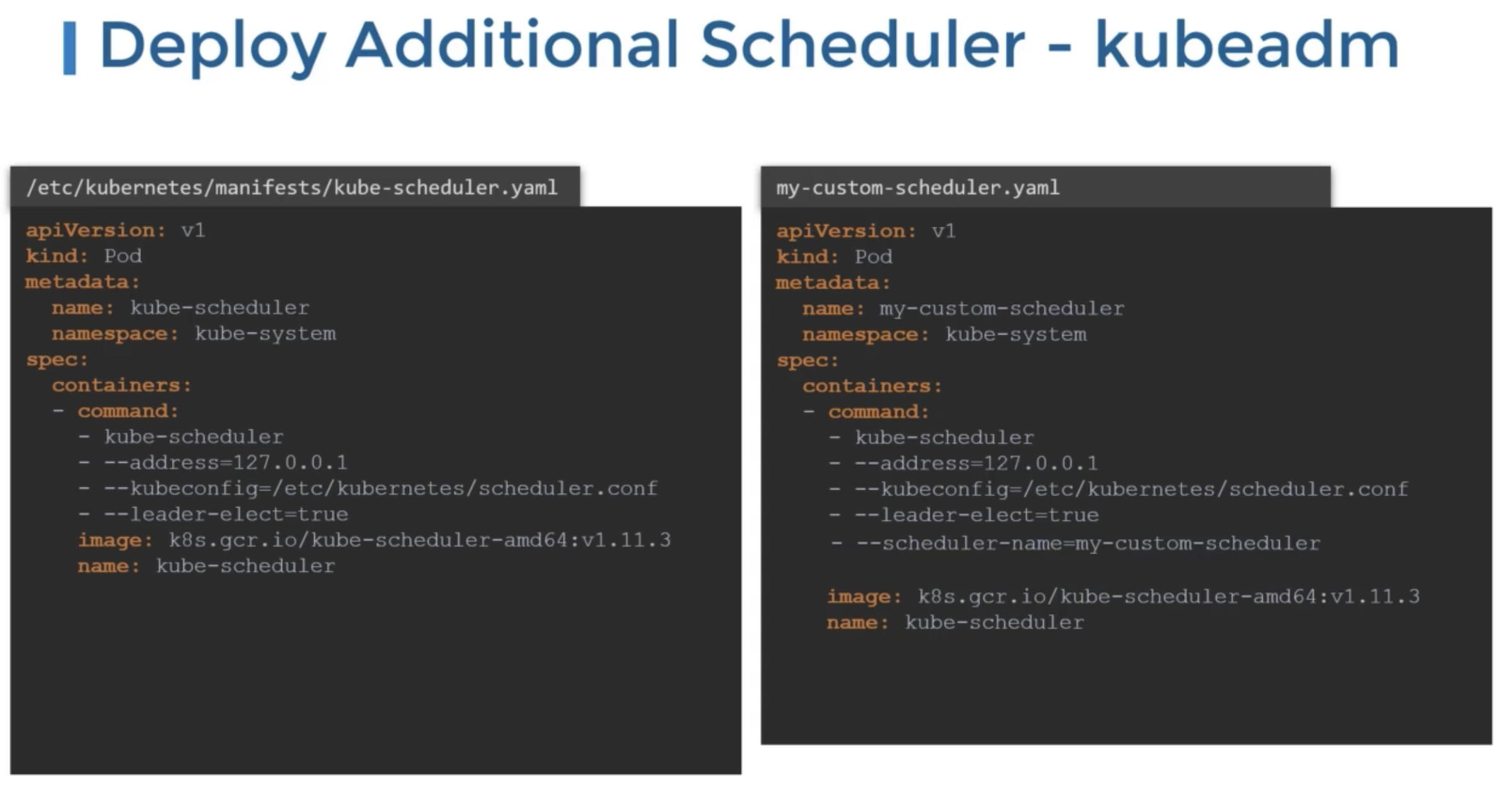

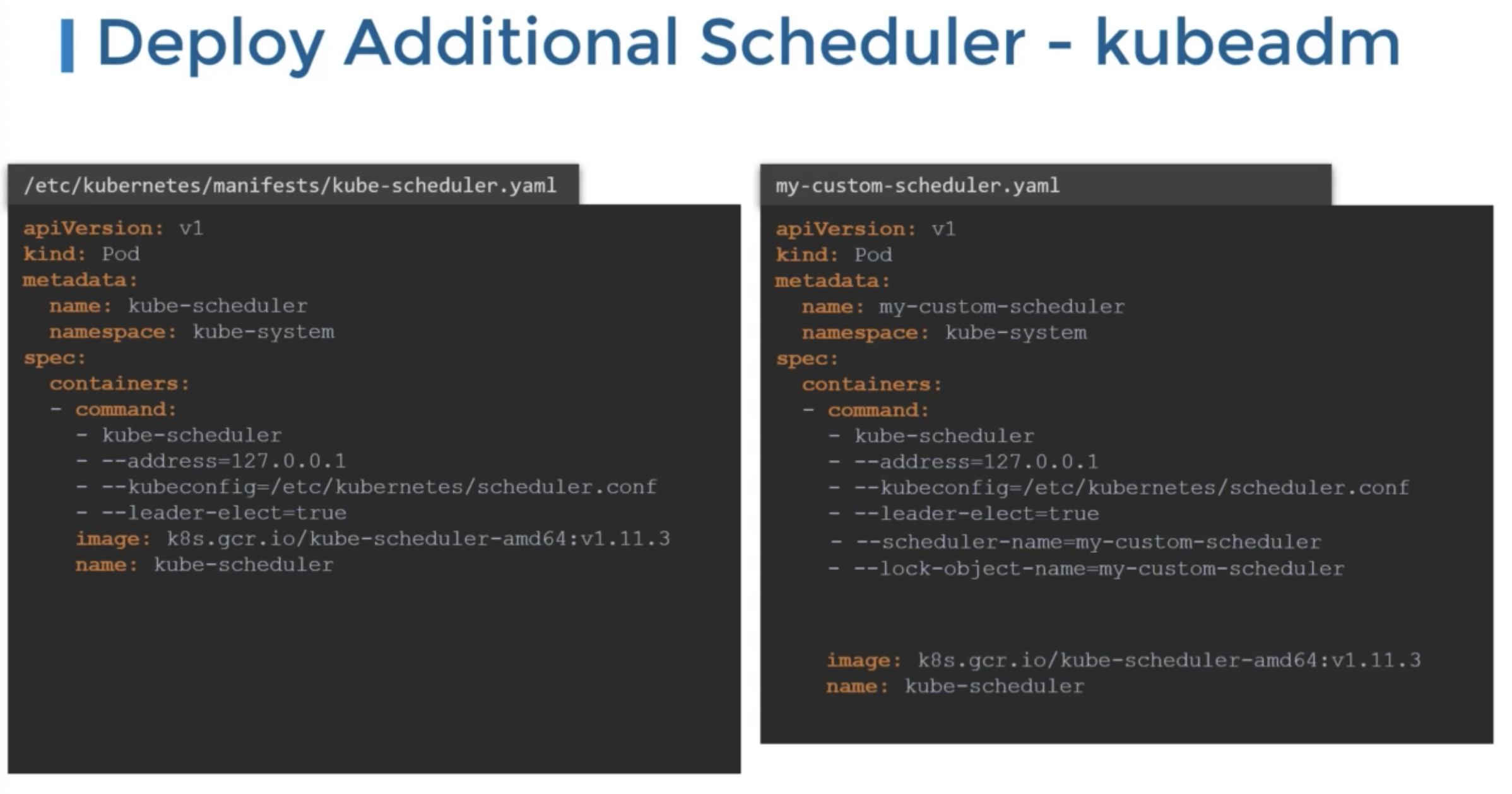

Multiple Schedulers

Create

- cli

- kubeadm

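--leader-elect

- used when multiple copies of the scheduler are running on different master nodes

- decides which copy leads the scheduling activity

--scheduler-name

- name of the scheduler

--lock-object-name

- name of the lock object used during leader election, so that the custom scheduler does not share a lock with the default one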
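In newer Kubernetes releases the scheduler name is normally set through a configuration file passed with `--config` rather than through the flags above. The sketch below assumes a cluster recent enough to serve `kubescheduler.config.k8s.io/v1` (older clusters such as v1.20 use a beta version of this API); the scheduler name matches the one used in these notes.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
  # the name pods reference in spec.schedulerName
  - schedulerName: my-custom-scheduler
leaderElection:
  # a single copy of the custom scheduler needs no leader election
  leaderElect: false
```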

Use

- definition

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
      schedulerName: my-custom-scheduler

Status

    kubectl get events

    kubectl logs my-custom-scheduler -n kube-system